Recognizing faces is as natural and habitual as can be for human beings. Even with their undeveloped vision, babies can recognize their mother’s face within days, while adults typically know some 5,000 faces. But what actually happens inside our brains during the process of recognizing a face? How are different facial features encoded in our brains? And can artificial intelligence learn to recognize faces the way humans do?

A new paper from the Weizmann Institute of Science proposes that the face-space geometries underlying facial recognition in state-of-the-art deep convolutional neural networks (DCNNs) have evolved to the point that they share patterns with the face-space geometries of face-selective neuronal groups in the human cortex.

The researchers analyzed data collected from 33 epilepsy patients who had previously had electrodes implanted in their brains for diagnostic purposes. They showed the volunteers pairs of faces and monitored activity in the part of the brain responsible for face perception. Some pairs elicited similar brain activity patterns, while others elicited patterns that differed greatly from one another.

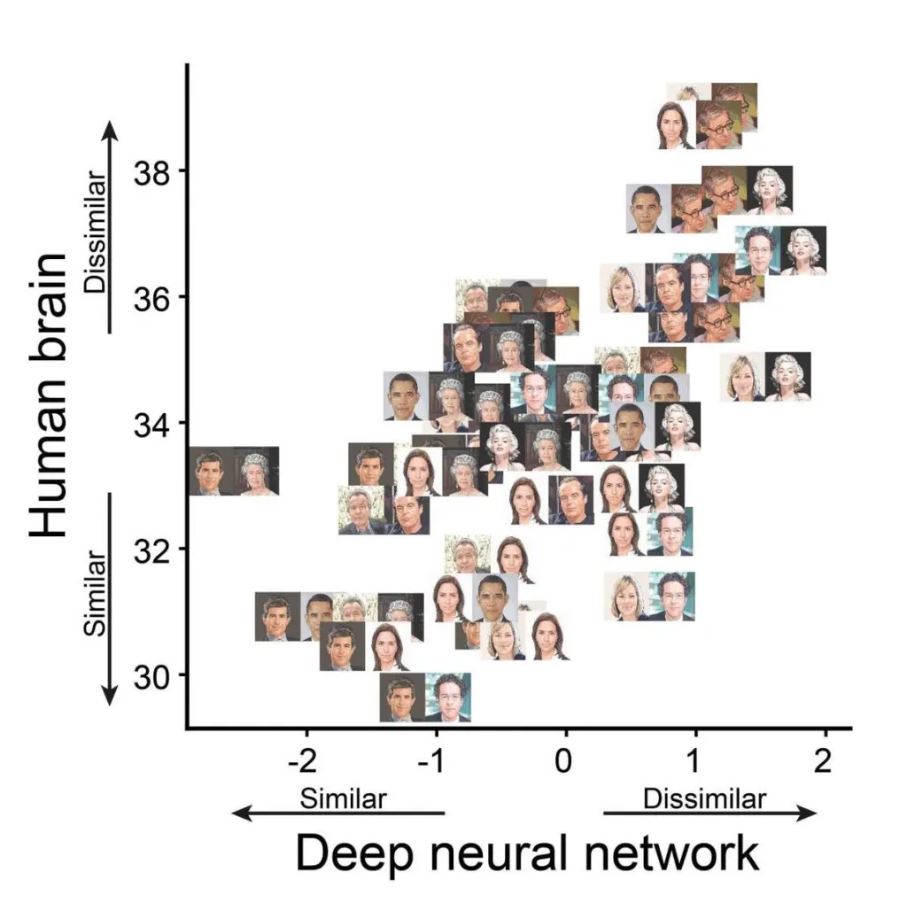

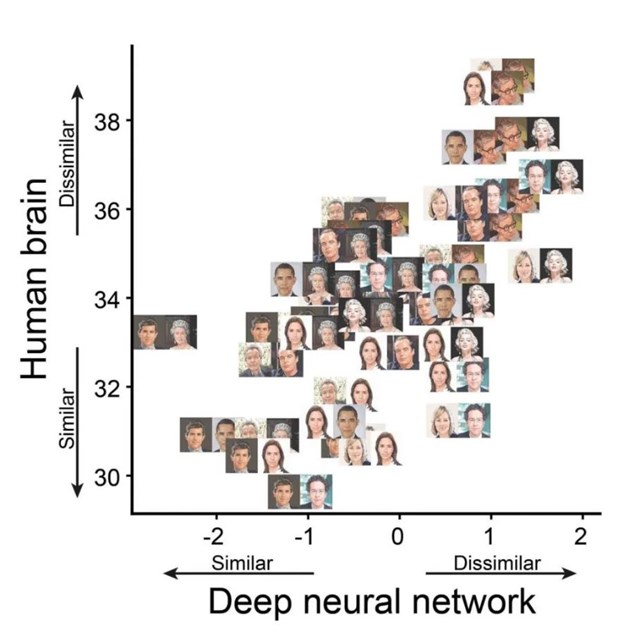

Plotted by similarity, most face pairs cluster close to the diagonal, showing a strong parallel between the way the brain and the network encode faces.

When the researchers presented the same face pairs to DCNNs, they found that the networks' similarity patterns matched the human brain responses most closely in the layers that represent the pictorial appearance of the faces.
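The kind of comparison described here can be illustrated with a small sketch: measure how far apart each pair of faces sits in the brain's response space and in one network layer's response space, then check how well the two sets of distances agree. Everything below is an assumption made for illustration, including the function names, the toy numbers, and the choice of Euclidean distance and Pearson correlation; it is not the paper's actual analysis pipeline.

```python
from itertools import combinations
from math import sqrt


def pairwise_distances(responses):
    """Euclidean distance between the response vectors of every face pair.

    `responses` maps a face label to a list of numbers (for example one
    value per electrode, or one value per unit in a network layer).
    """
    labels = sorted(responses)
    return {
        (a, b): sqrt(sum((x - y) ** 2 for x, y in zip(responses[a], responses[b])))
        for a, b in combinations(labels, 2)
    }


def pearson(xs, ys):
    """Plain Pearson correlation between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def face_space_match(brain, network):
    """Correlate pairwise face distances across the two systems."""
    d_brain = pairwise_distances(brain)
    d_net = pairwise_distances(network)
    pairs = sorted(d_brain)  # same face pairs, same order, for both systems
    return pearson([d_brain[p] for p in pairs], [d_net[p] for p in pairs])


# Toy, made-up responses to four faces (hypothetical values, not real data).
brain = {"A": [1.0, 0.0], "B": [0.9, 0.1], "C": [0.0, 1.0], "D": [0.2, 0.8]}
network = {
    "A": [2.0, 0.1, 0.0],
    "B": [1.8, 0.3, 0.1],
    "C": [0.1, 2.1, 0.0],
    "D": [0.5, 1.7, 0.2],
}
score = face_space_match(brain, network)
print(f"face-space agreement: {score:.3f}")
```

A score near 1 means that face pairs the brain treats as similar are also close together in the network layer, which is the pattern that would place most points near the diagonal in a plot like the one above. Note that the two systems can have different numbers of dimensions, since only the distances between faces are compared.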

“It’s highly informative that two such drastically different systems, a biological and an artificial one, that is, the brain and a deep neural network, have evolved in such a way that they possess similar characteristics,” says Prof. Rafi Malach from the research team. “I would call this convergent evolution, just as the wings of human-made airplanes show similarity to those of insects, birds and even mammals. Such convergence points to the crucial importance of unique face-coding patterns in face recognition.”

The researchers conclude that “employing the rapidly evolving new DCNNs may help in the future to resolve outstanding issues such as the functionalities of distinct cortical patches in high order visual areas and the role of top down and local recurrent processing in brain function.”

IBM has also been probing the brain for ways to improve machine learning. Earlier this year, researchers there proposed a neuroscience-inspired method for boosting the learning process in deep neural networks, and the team released an implementation of their biologically inspired neural networks on GitHub.

The paper “Convergent Evolution of Face Spaces Across Human Face-Selective Neuronal Groups and Deep Convolutional Networks” is available in Nature Communications.